TL;DR

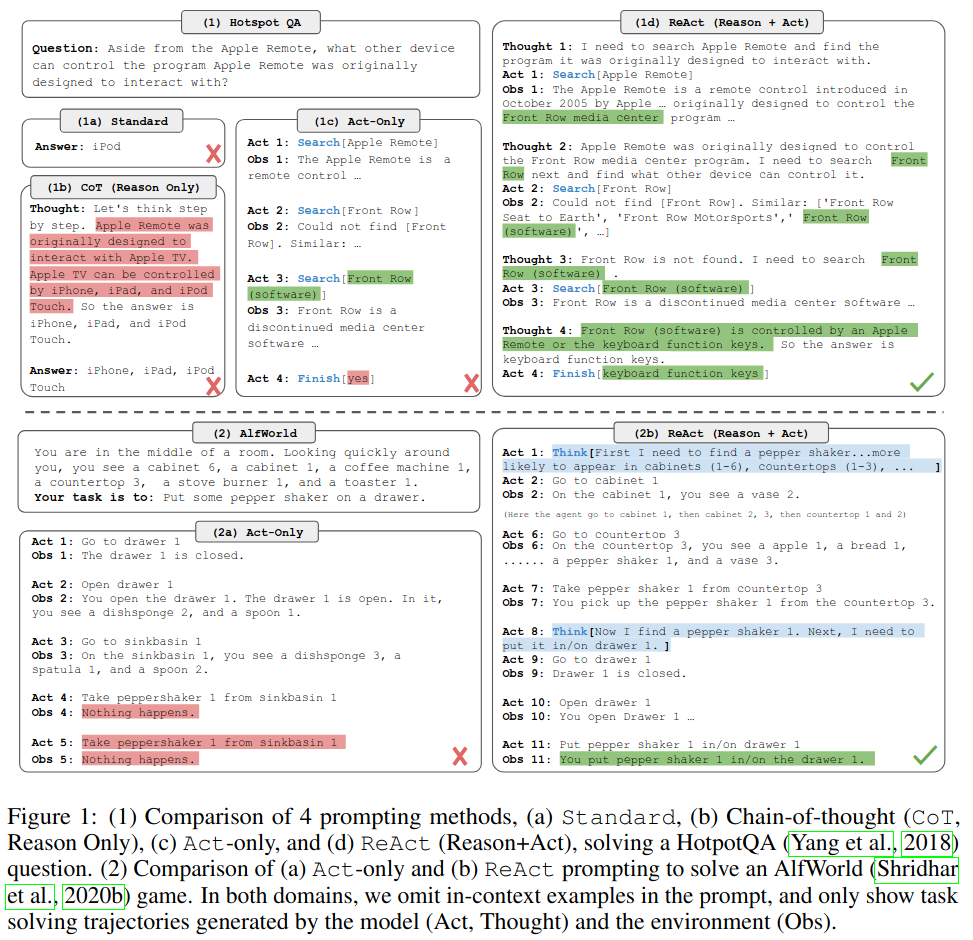

Large Language Models (LLMs) often suffer from hallucinations. Two common mitigation strategies are Chain of Thought (CoT), where the LLM is prompted to show its step-by-step reasoning, and Act, where LLMs use external tools to ground their answers in reliable databases. However, CoT relies on the model’s internal representations, limiting its ability to reason reactively or update its knowledge. ReAct is a prompting method that combines CoT with action plan generation using external tools. This approach significantly enhances LLM performance in decision-making and question answering.

ReAct

ReAct involves prompting an LLM with few-shot in-context examples to generate both domain-specific actions and free-form language thoughts for task solving. Each example includes a human trajectory of actions, thoughts, and environment observations:

- Thought: …

- Action: …

- Observation: …

In experiments, the following useful thoughts were observed:

- Decomposing task goals and creating action plans.

- Injecting commonsense knowledge relevant to task solving.

- Extracting important parts from observations.

- Tracking progress and transitioning action plans.

- Handling exceptions and adjusting action plans.

Limitations

- Dependency on in-context examples, which may not always be available

- Increased computational cost due to the need for generating both thoughts and actions

- Reliance on external tools, which may introduce latency or require integration efforts.